It costs what?! A few things to know before you develop with Gemini

Understand the financial implications of developing with Gemini, from per-token pricing to Denial-of-Wallet attacks, and learn how to protect your budget.

2025 is poised to be the year of agentic AI. We’re moving beyond chatbots and into an era where AI can interact directly with other systems, making …

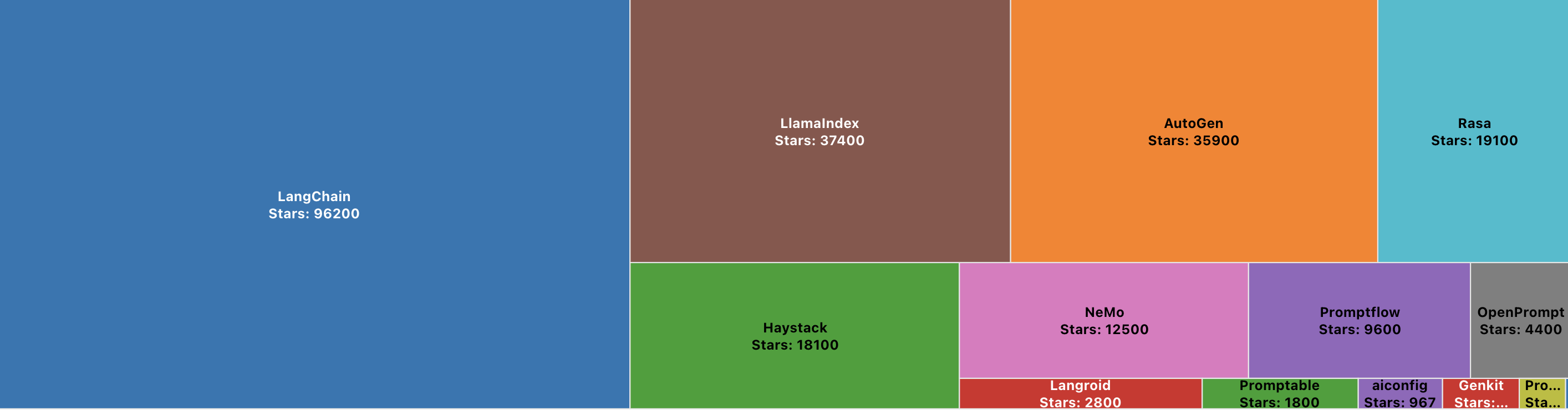
