4 Essential Authorisation Strategies for Agentic AI

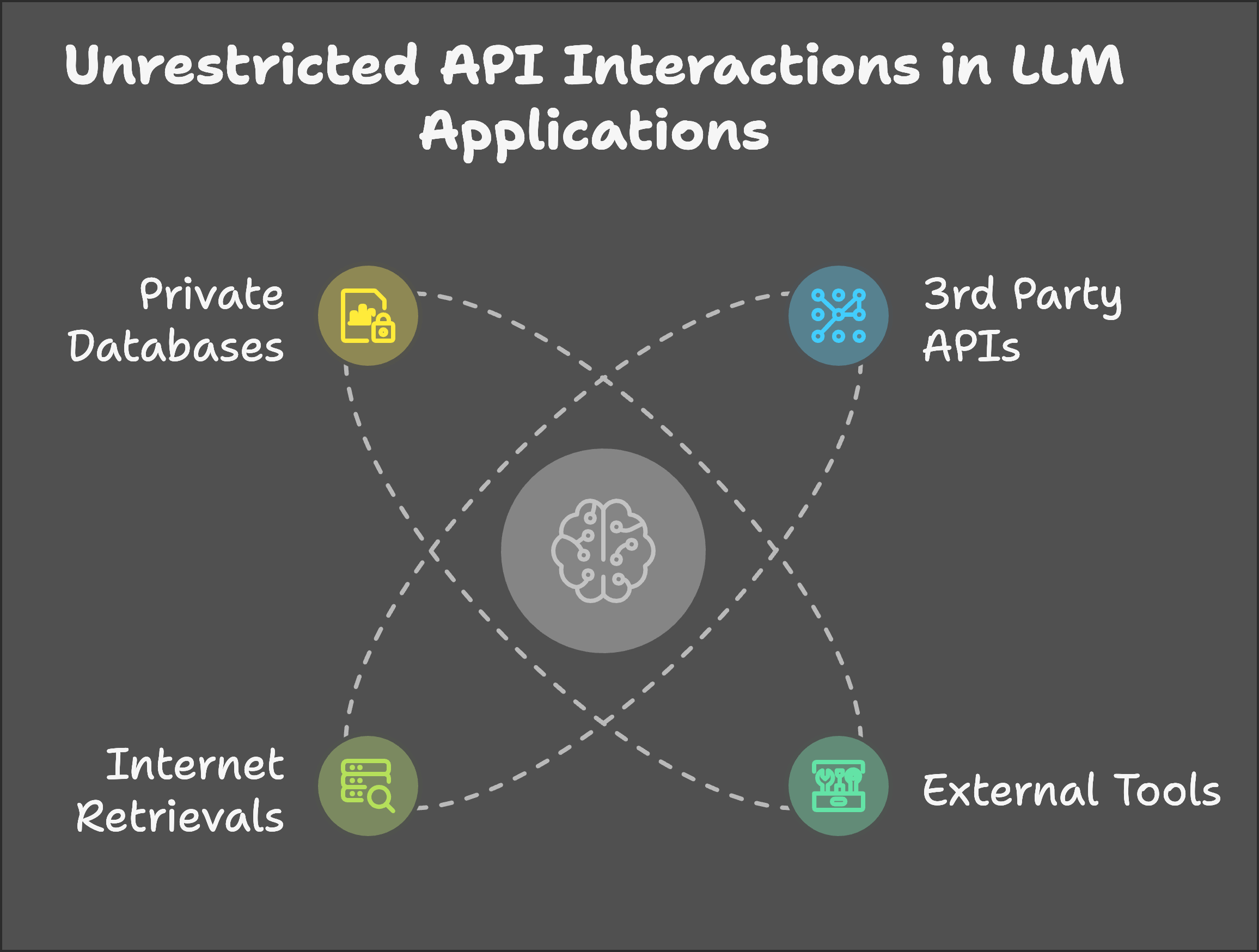

2025 is poised to be the year of agentic AI. We’re moving beyond chatbots and into an era where AI can interact directly with other systems, making decisions and taking actions with minimal human intervention. This shift unlocks incredible potential, but also introduces new security challenges. With vulnerabilities like prompt injection on the rise, where malicious actors can hijack AI functionality, we need robust safeguards to ensure these powerful agents remain under our control.

With prompt injection unlikely to ever be fully solved, we should assume that LLMs, especially public-facing ones, will be compromised, and secure the systems around them with that in mind. For this, authorisation is key. It defines what an AI is allowed to do, preventing it from becoming an agent of chaos.

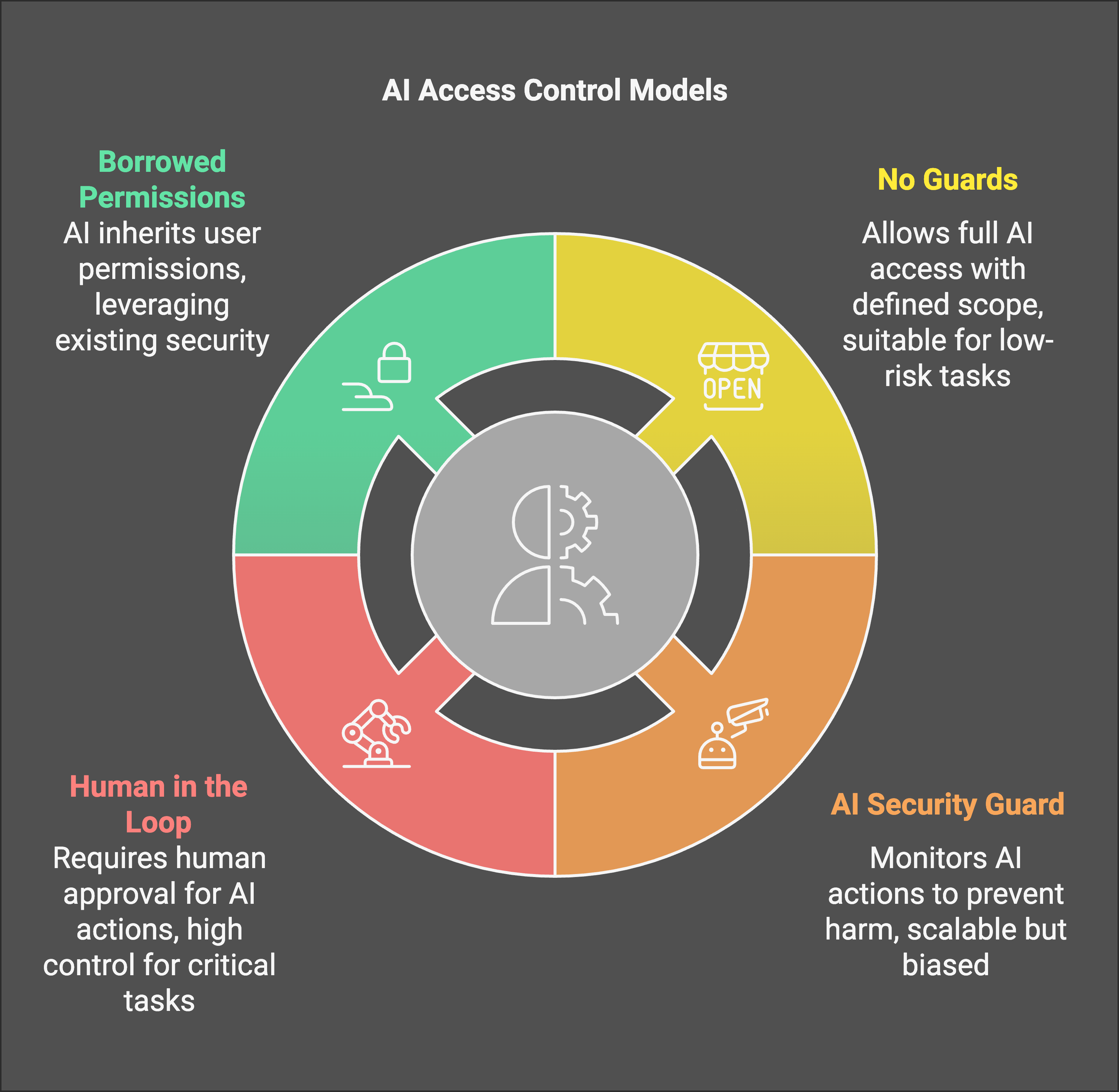

Authorisation Strategies for LLM Agents

These are the 4 core strategies to consider. You are likely already using one or a combination of them when developing apps, but being aware of them and making a deliberate decision about which to use is the first step towards more secure applications.

No Guards (Full Access)

This strategy grants the AI complete freedom within a predefined scope. It can access specific data, execute a limited set of commands, and make decisions without any further checks or approvals. This approach can be suitable for certain types of agentic AI, particularly those dealing with less critical tasks, operating in controlled environments, or those where experimentation and exploration are prioritised.

- Benefits: This approach maximises flexibility and can foster innovation by allowing the AI to explore a wider range of possibilities. It can be particularly useful for tasks like content generation, game playing, or simulations where the consequences of unintended actions are minimal.

- Risks: Even with seemingly harmless applications, unrestricted AI could still lead to unintended consequences, such as generating inappropriate content, making erroneous decisions, or being exploited for malicious activities.

- Example: A marketing team uses an AI writing assistant to brainstorm social media campaigns. The AI has full access to the company’s brand guidelines and product information but is restricted from accessing sensitive financial data.
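
As a rough illustration of "full access within a predefined scope", the sketch below exposes only an allowlisted set of tools to the agent and applies no further checks to calls inside that set. The tool names, return values, and the `call_tool` helper are hypothetical and only mirror the marketing example above.

```python
from typing import Callable, Dict


# Hypothetical tools; names and return values are illustrative only.
def read_brand_guidelines() -> str:
    return "Tone: friendly, concise."


def read_product_info() -> str:
    return "Product: Acme Widget."


def read_financials() -> str:
    return "Q3 revenue: confidential."


# The predefined scope: only these tools are ever exposed to the agent.
# read_financials exists in the codebase but is deliberately left out.
AGENT_SCOPE: Dict[str, Callable[[], str]] = {
    "read_brand_guidelines": read_brand_guidelines,
    "read_product_info": read_product_info,
}


def call_tool(name: str) -> str:
    """Run a tool the agent asked for; the only check is scope membership."""
    if name not in AGENT_SCOPE:
        raise PermissionError(f"tool {name!r} is outside the agent's scope")
    return AGENT_SCOPE[name]()
```

Everything inside `AGENT_SCOPE` runs unchecked, so the safety of this approach rests entirely on how carefully that scope is drawn.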

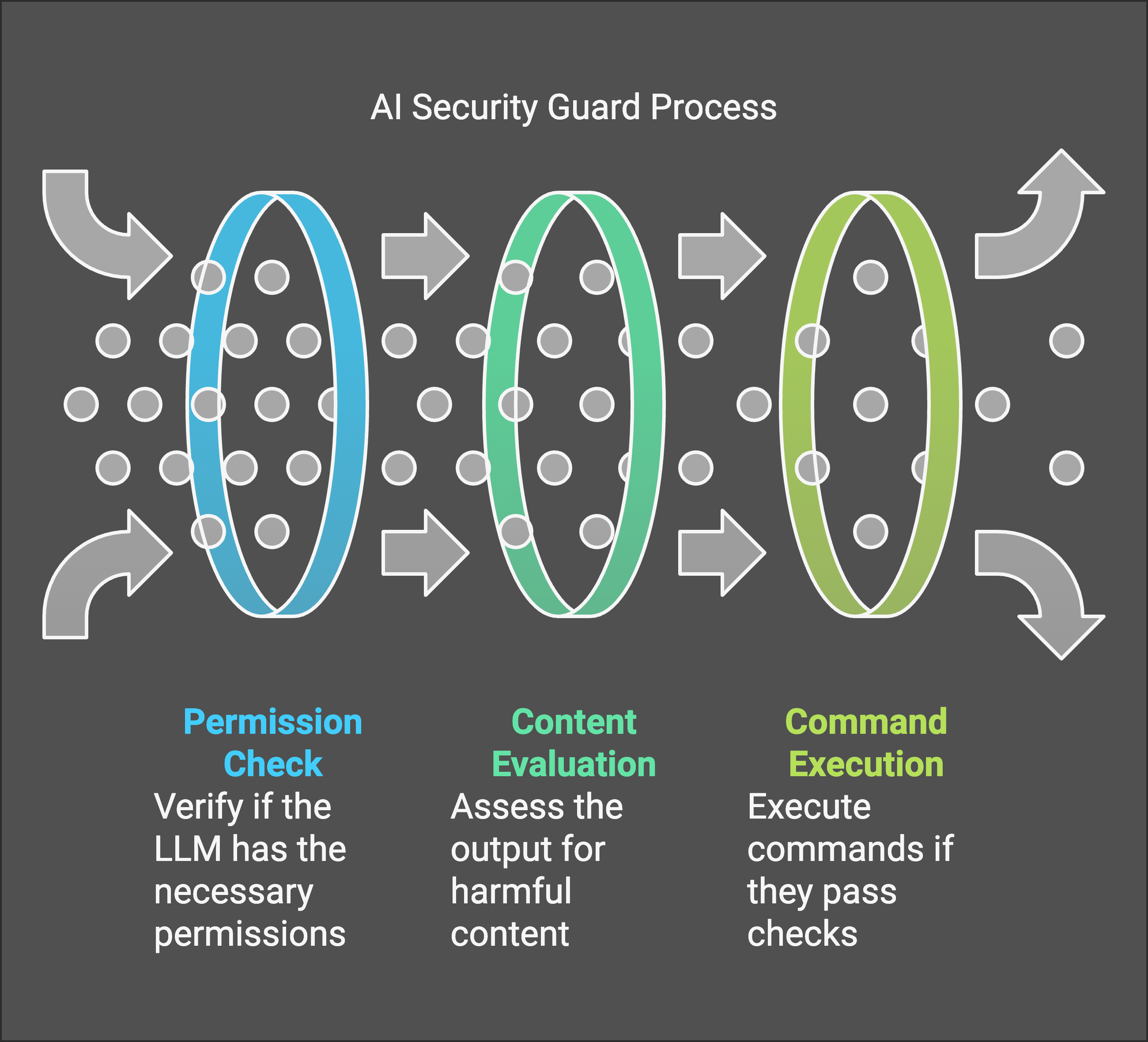

AI Security Guard

This strategy employs a separate AI system to act as a security layer, monitoring the actions of the primary agentic AI and blocking any potentially harmful activities. This approach leverages the strengths of AI itself to provide scalable, real-time monitoring and adaptive security that can evolve with the changing threat landscape.

- Benefits: This approach offers the potential for highly scalable and adaptive security. The security AI can continuously learn and improve its ability to detect and prevent risky behaviour.

- Challenges: Relying solely on AI for security has its limitations. The security AI itself could be vulnerable to attacks, and its mistakes and bypasses are difficult to test for, which leaves it open to novel attacks. Explainability is also a concern: it can be difficult to understand why a security AI made a particular decision, which makes testing harder still. (Learn more about evaluating the safety and security of LLM Applications)

- Example: An investment firm uses an AI to manage a portfolio of stocks. A second AI monitors the trading activity, flagging any suspicious transactions or trades that deviate significantly from the established risk profile.
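
A minimal sketch of the guard pattern, assuming the guard can be modelled as a function that returns a verdict on each proposed action. The `Trade` type, `stub_guard`, and the fixed threshold are all hypothetical; in a real system the guard would call a separate model or classifier rather than apply a hard-coded rule.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Trade:
    symbol: str
    notional: float  # trade value in the account currency


# A guard takes a proposed trade and returns True if it may proceed.
Guard = Callable[[Trade], bool]


def stub_guard(trade: Trade, max_notional: float = 10_000.0) -> bool:
    """Placeholder for the second AI.

    A real guard would call a separate model or classifier; this fixed
    threshold only stands in for its verdict.
    """
    return trade.notional <= max_notional


def execute_with_guard(trade: Trade, guard: Guard) -> str:
    """Execute the primary agent's proposed trade only if the guard allows it."""
    if not guard(trade):
        return f"BLOCKED {trade.symbol}"
    return f"EXECUTED {trade.symbol}"
```

The point of the structure is that the primary agent never executes anything directly: every proposed action passes through `execute_with_guard`, so the guard can be swapped or retrained without touching the agent.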

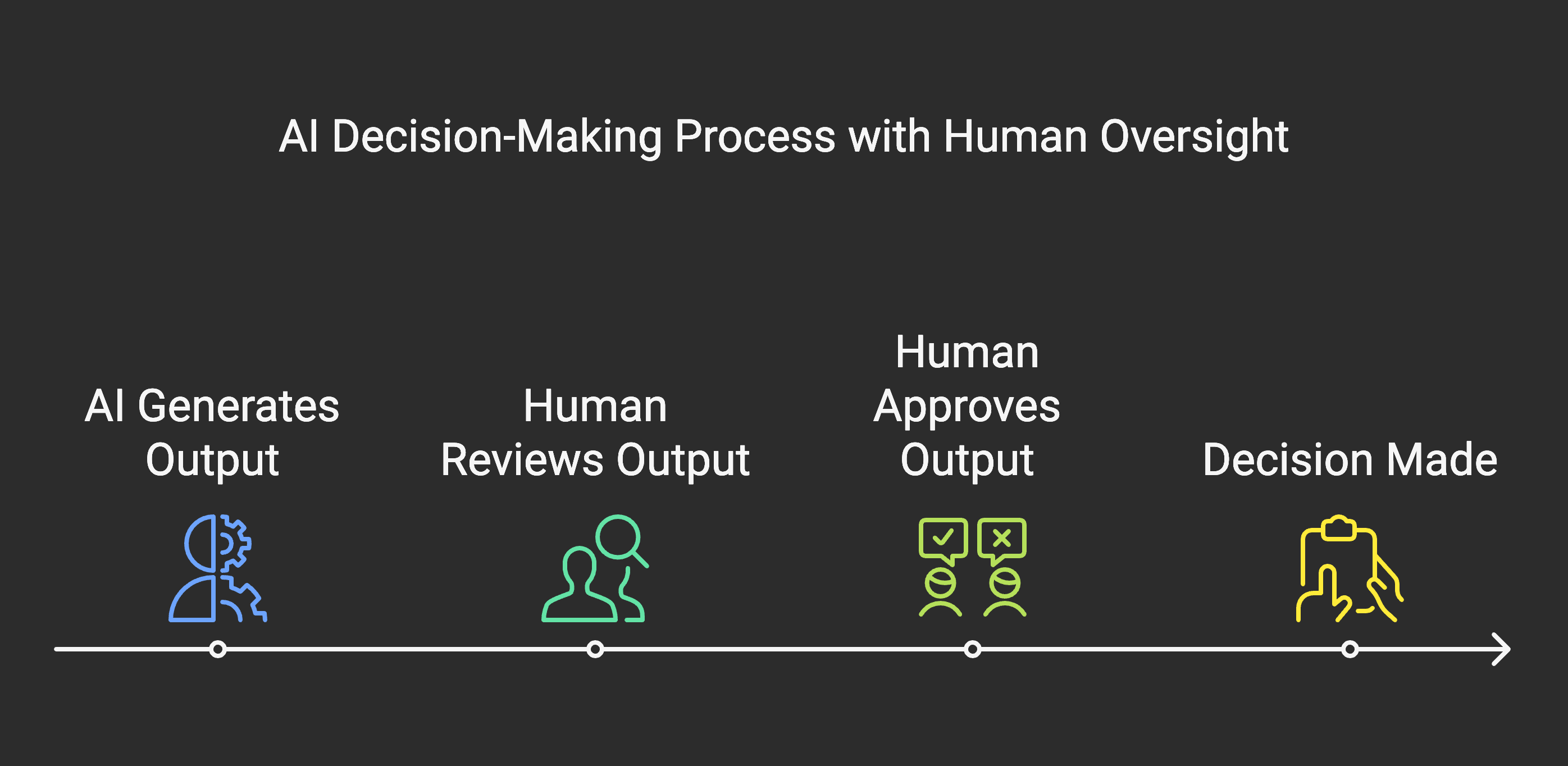

Human in the Loop

This strategy places human operators at the heart of the authorisation process. Human operators review and approve the AI’s proposed actions before they are executed. This provides the strongest level of control, allowing for human judgment, ethical considerations, and common sense to be applied to AI decision-making.

- Benefits: Human oversight offers robust security and ensures that critical decisions are aligned with human values and ethical guidelines. It also provides an opportunity for continuous improvement through feedback and learning from human intervention.

- Challenges: While human oversight offers robust security, it can also introduce bottlenecks and scalability challenges. In many cases, adding a human in the loop is not feasible, or defeats the purpose of using AI at all.

- Example: A hospital uses an AI to analyse medical images and assist with diagnoses. A radiologist reviews the AI’s findings before a treatment plan is finalised.
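
One way to picture the approval step is as a gate between the AI's proposal and its execution. The sketch below is an assumption-laden outline, not a production design: `ProposedAction`, `console_approver`, and `run_with_approval` are hypothetical names, and a real deployment would more likely use a review queue or ticketing system than a terminal prompt.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ProposedAction:
    description: str


# An approver is any callable that returns True to approve the action.
Approver = Callable[[ProposedAction], bool]


def console_approver(action: ProposedAction) -> bool:
    """Ask a human operator on the terminal; anything but 'y' is a rejection."""
    answer = input(f"Approve '{action.description}'? [y/N] ")
    return answer.strip().lower() == "y"


def run_with_approval(
    action: ProposedAction,
    approver: Approver,
    execute: Callable[[ProposedAction], str],
) -> str:
    """Run the AI's proposed action only after a human has approved it."""
    if not approver(action):
        return "rejected"
    return execute(action)
```

Calling `run_with_approval(action, console_approver, execute)` blocks until the operator answers, which is exactly the bottleneck described under Challenges.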

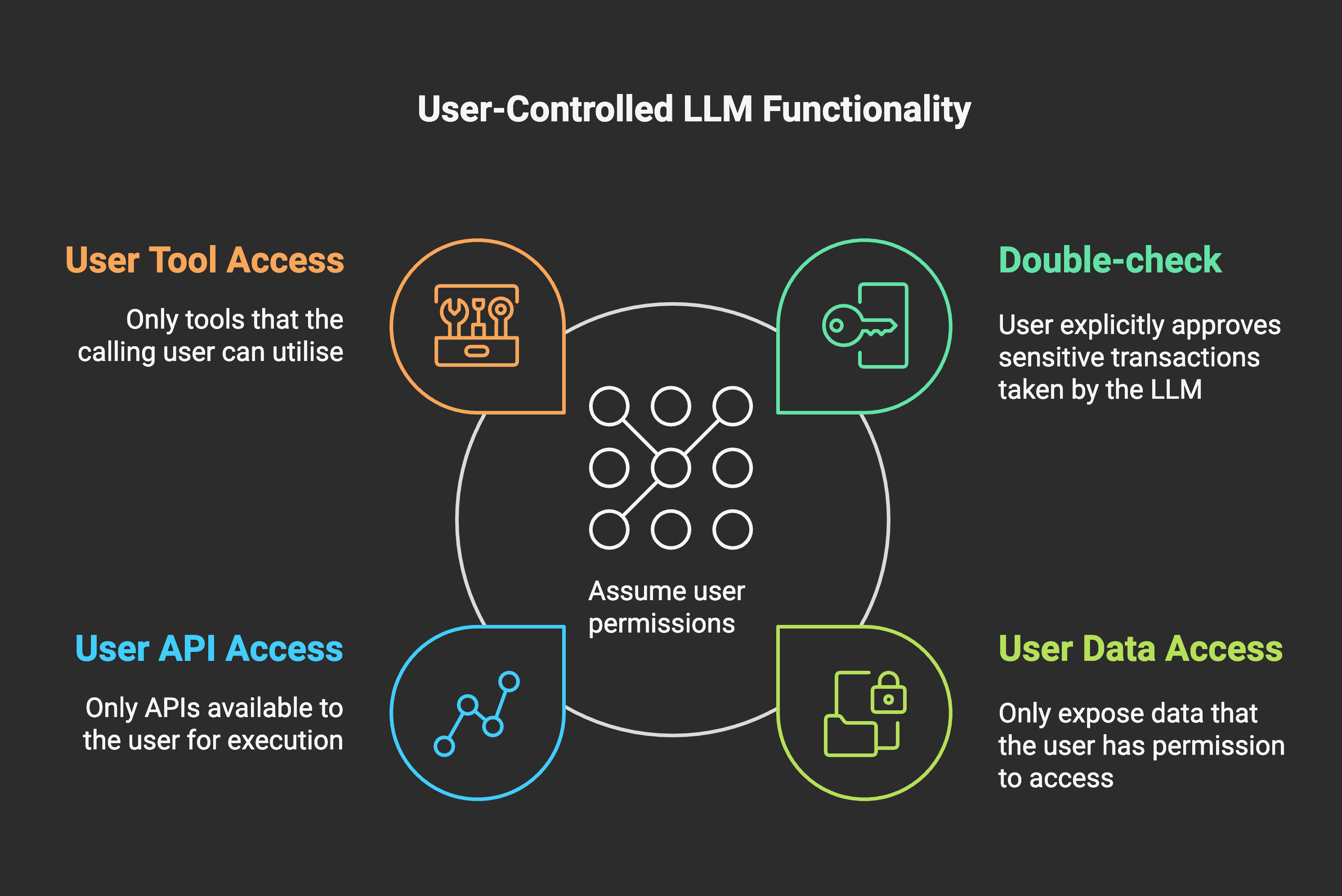

Borrowed Permissions

This strategy integrates the AI into existing security frameworks by granting it the same permissions as the user who invoked it. This means the AI can only access the data and perform the actions that the user is authorised for. This approach leverages existing security infrastructure and access control mechanisms, simplifying authorisation management and limiting risks such as data leaks. In addition, it is good practice with this technique for the user to approve specific actions before the LLM executes them, for example before transferring money or sending a tweet.

- Benefits: This approach simplifies authorisation management, ensures clear accountability, and aligns AI actions with established security policies. It’s a practical and efficient way to integrate AI into existing workflows without requiring significant changes to security infrastructure.

- Considerations: The effectiveness of this strategy depends on the strength and granularity of the existing permission system. It may not be suitable for all applications, especially those where the AI needs to perform actions beyond the user’s typical permissions.

- Example: A project manager uses an AI assistant to schedule meetings and manage tasks. The AI can only access the projects and calendars that the project manager has permission to view.
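
A minimal sketch of borrowed permissions, assuming permissions can be represented as simple strings attached to the invoking user. The `User` and `AgentSession` types and the `calendar:<id>:read` permission format are hypothetical; a real application would delegate to whatever access control system it already has.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class User:
    name: str
    permissions: FrozenSet[str] = frozenset()


class AgentSession:
    """An agent session bound to the user who invoked it.

    The agent holds no permissions of its own; every tool call is checked
    against the invoking user's permissions.
    """

    def __init__(self, user: User) -> None:
        self.user = user

    def read_calendar(self, calendar_id: str) -> str:
        required = f"calendar:{calendar_id}:read"
        if required not in self.user.permissions:
            raise PermissionError(f"{self.user.name} lacks {required}")
        return f"events for {calendar_id}"
```

Because the check lives in the tool rather than in the prompt, a compromised LLM asking for another team's calendar gets the same `PermissionError` the user would.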

Choosing the Right Strategy

The optimal strategy depends on the AI’s capabilities, potential risks, and the organisation’s risk tolerance. Balancing innovation with robust security will be tough, and the trade-offs you make need to be carefully considered as part of the overall risk your app poses. As more systems become integrated with LLMs, agentic AI security will only grow in importance.